|

waLBerla 7.2

|

|

waLBerla 7.2

|

Implementing an iterative solver, initializing fields, and setting Dirichlet boundary conditions by functions, domain setup with an axis-aligned bounding box. File 05_SolvingPDE.cpp.

After learning the basic concepts and data structures of waLBerla, we can now already implement our own solver for an elliptic partial differential equation, discretized by finite differences. The regarded equation, describing the behavior of the unknown \(u\), is:

\[ - \frac{\partial^2 u(x,y)}{\partial x^2} - \frac{\partial^2 u(x,y)}{\partial y^2} + 4 \pi^2 u(x,y) = f(x,y) \]

with the right-hand side function

\[ f(x,y) = 4\pi^2\sin(2\pi x)\sinh(2\pi y) \]

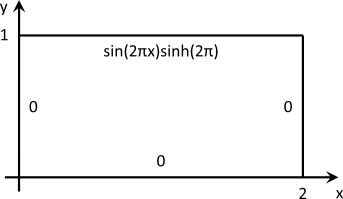

and the domain is the two-dimensional \( \Omega = [0,2] \times [0,1]\). The boundary conditions are of Dirichlet type and zero everywhere except for the top boundary, where:

\[ u(x,1) = \sin(2\pi x)\sinh(2\pi) \]

This results in the following set-up:

From basic numerical courses, we know that this equation can be discretized with finite differences which yields the following discretized equation, valid for a Cartesian grid with uniform spacing in x- \((\Delta x)\) and y-direction \((\Delta y)\):

\[ \frac{1}{(\Delta x)^2} \left(-u_{i+1,j} + 2u_{i,j} - u_{i-1,j}\right) + \frac{1}{(\Delta y)^2} \left(-u_{i,j+1} + 2u_{i,j} - u_{i,j-1}\right) + 4\pi^2 u_{i,j} = f_{i,j} \]

The resulting linear system of equations ( \(\mathbf{A}x=b\)) can be solved with either a direct or an iterative solver. Probably the simplest variant of the latter is the Jacobi method which applies a splitting of the system matrix in its diagonal and off-diagonal parts to come up with an iterative solution procedure. This finally allows to write the method in a matrix-free version, given by:

\[ u_{i,j}^{(n+1)} = \left(\frac{2}{(\Delta x)^2} + \frac{2}{(\Delta y)^2} + 4\pi^2\right)^{-1}\left[ f_{i,j} + \frac{1}{(\Delta x)^2}\left(u_{i+1,j}^{(n)} + u_{i-1,j}^{(n)}\right) + \frac{1}{(\Delta y)^2} \left(u_{i,j+1}^{(n)} + u_{i,j-1}^{(n)}\right)\right] \]

Coming back to the concepts of waLBerla, this is a perfectly suitable example for a sweep. It requires summing up weighted values of the neighboring cells together with the function value of the right-hand side, which is then divided by the weight of the center cell. To avoid data dependencies, i.e., reading neighboring data that has already been updated in a previous step, two fields (source and destination) are used like in Tutorial - Basics 3: Writing a Simple Cellular Automaton in waLBerla. After the sweep, the data pointers are swapped. A possible, but not optimal, implementation of this procedure is shown below. The class has private members srcID_, dstID_, rhsID_, dx_, dy_ that store the BlockDataIDs of the source, destination, and right-hand side field, and the grid spacings \(\Delta x\) and \( \Delta y\).

This is already the entire implementation of the Jacobi method! As always, there are of course various other ways to achieve this. The stencil concept utilized by waLBerla allows a very convenient (since flexible) and also faster implementation with the help of neighborhood iterators and stencil weights. The performance problem of the above implementation lies in the fact that the prefactors are recomputed for each step and each cell. Since they are constant, it is a better idea to precalculate them and store them in a weights vector.

A new class JacobiSweepStencil is implemented that takes this weights vector and stores it as its private member weights_. The actual sweep, again implemented as a functor, can then be written as:

At this point, another very common performance optimization strategy can be applied: to omit the costly division by the center weight in the last step of the Jacobi sweep, this weight is already stored in inverse form to allow a fast multiplication later on. The constructor of this class then looks like this:

And the respective line of code in the Jacobi sweep has to be changed accordingly:

Despite being faster, the implementation using the stencil concept is more flexible than the hard coded variant from the beginning since it can be used for different stencil layouts. Say you use a different, probably higher-order, discretization of the PDE and come up with a D2Q9 stencil. Then, simply change the typedef to typedef stencil::D2Q9 Stencil_T and add the new weight values in the weights vector accordingly. The implementation of the JacobiSweepStencil class stays untouched and the simulations can readily be carried out.

This functor is now added to the time loop object as in the basic tutorials and the simulation can be started. At the moment, however, the simulation is very boring since the right-hand side is zero and so are the boundary conditions. It is easy to check that then \( u(x,y) = 0\) is the trivial solution. In the next sections, the functions to initialize those missing parts of the problem are shown.

In the first step of the sweep, the values of the rhs field are accessed. This field, of course, has to be initialized first, according to the given function f. This involves the evaluation of the physical coordinates of the cell centers to get the proper values for the x and y coordinate. Such a initialization function might look like this:

With the help of the CellInterval object, an iterator that loops over the cells can be generated. The physical coordinates are then found by the getBlockLocalCellCenter function, with the block and the cell as arguments, and are stored in a three-dimensional vector p. This allows to obtain the x-coordinate with p[0] and the y-coordinate with p[1]. Thus, the function f can be evaluated at the specific cell.

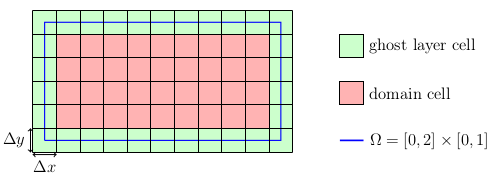

In contrast to the basic tutorial, the domain is not periodic but has fixed Dirichlet boundaries on all sides. In waLBerla, it comes in naturally to use the ghost layers around the fields to store the Dirichlet values. Those are then accessed automatically in the sweep which loops only over the cells in the interior of the domain. This is carried out in the following function where only the values at the top boundary are set since the others are already initialized with zeros:

The principle action of setting the values is the same as shown for the right-hand side before. Notable, however, is the modification of the CellInterval object to yield an iterator that only loops over the ghost layer cells at the top boundary, obtained by yMax().

As mentioned before, the values stored in the cells are regarded as being placed at the cell center. This introduces a slightly different way of setting the domain as one might be used to from node centered layouts. Our goal is to set the centers of the boundary cells, i.e. the ghost layers, directly at the Dirichlet boundaries. Since the ghost layer is an additional layer that is added around each block, the domain we have to set up is actually smaller than \(\Omega\). Convince yourself that this is correct by looking at the targeted grid layout:

Thus, the computational domain (red) is \(\Omega_C = [\Delta x/2, 2-\Delta x/2] \times [\Delta y/2, 1-\Delta y/2]\). A convenient way to specify such a domain is the usage of an axis-aligned bounding box (aabb):

In waLBerla, all simulations are in principle in 3D. A two-dimensional set-up can be achieved by choosing only a single cell in z-direction with a finite cell size, here dx. The creation of the blocks is then carried out with another variant of the already known createUniformBlockGrid routine:

Putting everything together, setting up the communication scheme for the D2Q5 stencil and adding everything to the time loop, as known from the basic tutorials, the simulation can be carried out.

In the next tutorial of the PDE section, Tutorial - PDE 2: Solving the Heat Equation, the Jacobi method is used to solve the linear system of equations arising from an implicit time-stepping method for the solution of the famous heat equation. It is also shown how to include the functionality to stop the simulation based on the norm of the residual, which is very often used to terminate the iterative solver.